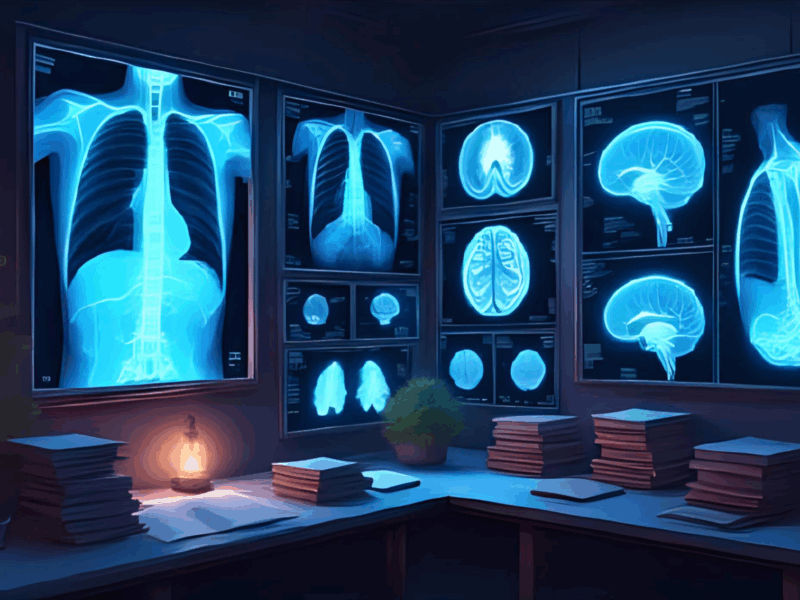

ViT vs CNN: Vision Transformers and Convolutional Neural Networks in Medical Image Analysis

I read this article today and took some notes, which I am recording here for future work and reference. The article is "Comparison of Vision Transformers and Convolutional Neural Networks in Medical Image Analysis: A Systematic Review" by Takahashi, S., Sakaguchi, Y., Kouno, N. et al., published in the Journal of Medical Systems 48, 84 (2024), https://doi.org/10.1007/s10916-024-02105-8 [1].

In medical imaging, artificial intelligence (AI) has enabled significant breakthroughs in disease detection, segmentation, and classification. Two architectures—Convolutional Neural Networks (CNNs) and Vision Transformers (ViTs)—dominate current research. CNNs have been around longer and are well-tested, while ViTs bring innovations from natural language processing (NLP) to image analysis. Understanding the strengths and weaknesses of these approaches is crucial for choosing the right tool in clinical and research applications.

Convolutional Neural Networks (CNNs)

The strength of CNNs lies in the concept of convolution, where small filters (kernels) slide over images to detect local patterns—edges, shapes, and textures. As these kernels stack in deeper layers, the network can learn increasingly complex representations.

\[ (I * K)(x, y) = \sum_{i=0}^{a} \sum_{j=0}^{b} I(x + i, y + j)\,K(i, j) \]
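To make the formula concrete, here is a minimal pure-Python sketch of the same sum, assuming no padding and a stride of 1. The function name `conv2d_valid`, the toy image, and the kernel are my own illustrations, not something from the article. Note that the formula (like most deep learning libraries) does not flip the kernel, so strictly speaking it is a cross-correlation.

```python
def conv2d_valid(image, kernel):
    """Slide `kernel` over `image` (both lists of rows), no padding, stride 1."""
    kh, kw = len(kernel), len(kernel[0])
    out_h = len(image) - kh + 1
    out_w = len(image[0]) - kw + 1
    output = []
    for x in range(out_h):
        row = []
        for y in range(out_w):
            # Sum over the kernel window anchored at (x, y), as in the formula.
            total = 0
            for i in range(kh):
                for j in range(kw):
                    total += image[x + i][y + j] * kernel[i][j]
            row.append(total)
        output.append(row)
    return output


# Toy 4x4 "image" with a vertical edge between columns 1 and 2.
image = [
    [0, 0, 9, 9],
    [0, 0, 9, 9],
    [0, 0, 9, 9],
    [0, 0, 9, 9],
]
# Horizontal-difference kernel: responds where intensity changes left to right.
kernel = [[-1, 1]]

edges = conv2d_valid(image, kernel)
for row in edges:
    print(row)  # each row is [0, 9, 0]: the response peaks at the edge
```

The same small kernel is reused at every position, which is why the edge is found in every row without any extra weights.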

CNN Advantages in Medical Imaging

- Localized Feature Extraction: Convolution kernels capture intricate details—like subtle tumors on an MRI scan or microcalcifications in a mammogram.

- Parameter Sharing: By applying the same kernels across different parts of the image, CNNs reduce the number of parameters, helping them train effectively without requiring extremely large datasets.

- Efficiency on Modern Hardware: Convolutions can be parallelized well on GPUs, making CNNs computationally efficient for many tasks.
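The parameter-sharing point is easy to check with a bit of arithmetic. The sketch below compares a 3×3 convolutional layer with a fully connected layer on a 224×224 grayscale input; the layer sizes are hypothetical numbers I picked for illustration, not figures from the article.

```python
def conv_layer_params(in_channels, out_channels, kernel_size):
    """Kernel weights plus one bias per output channel; independent of image size."""
    return out_channels * (in_channels * kernel_size * kernel_size + 1)


def dense_layer_params(in_features, out_features):
    """Fully connected layer: one weight per input-output pair, plus biases."""
    return out_features * (in_features + 1)


# Hypothetical setting: a 224x224 grayscale scan.
conv_params = conv_layer_params(in_channels=1, out_channels=16, kernel_size=3)
dense_params = dense_layer_params(in_features=224 * 224, out_features=16)

print(conv_params)   # 160
print(dense_params)  # 802832
```

Even a dense layer with only 16 outputs needs roughly 5,000 times more parameters than 16 shared 3×3 kernels, and the convolutional count stays the same if the scan gets larger.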

CNN Challenges

- Limited Receptive Field: While convolutions capture local features, they can struggle to model global relationships or long-range dependencies.

- Explainability: CNNs often act as “black boxes,” providing limited insight into how they arrive at a particular diagnosis. Techniques like Grad-CAM exist, but interpretability remains a work in progress.

- Domain Shift: CNNs can falter if confronted with images taken by different scanners, from different hospitals, or under different conditions than their training data.
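To get a feel for the limited receptive field, the following sketch computes how many input pixels one output unit can "see" after a stack of layers, using the standard recurrence for kernel size and stride. The layer stacks are hypothetical examples of mine, not architectures from the article.

```python
def receptive_field(layers):
    """layers: list of (kernel_size, stride) tuples, ordered from input to output."""
    rf, jump = 1, 1  # jump = distance in input pixels between neighbouring units
    for kernel_size, stride in layers:
        rf += (kernel_size - 1) * jump
        jump *= stride
    return rf


ten_convs = [(3, 1)] * 10
with_pooling = [(3, 1), (2, 2), (3, 1), (2, 2), (3, 1)]

print(receptive_field(ten_convs))     # 21
print(receptive_field(with_pooling))  # 18
```

Ten stacked 3×3 convolutions still only cover a 21×21 window, which is small compared with a 512×512 scan. This is why CNNs rely on pooling, strides, or many layers to reach global context.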

Despite these limitations, CNNs have achieved near-human or sometimes human-level accuracy in tasks like lesion segmentation, disease classification, and pathology detection, making them a staple in medical imaging.

Vision Transformers (ViTs)

Transformer architectures, originally a game-changer in NLP (think GPT and BERT), soon ventured into vision. The key innovation is the self-attention mechanism, which learns to focus on the most important parts of the input without using recurrent or convolutional layers.


How ViTs Work

- Patch-Based Approach: An image is split into patches (e.g., 16×16). Each patch is treated like a “token” (similar to how words are treated in NLP).

- Self-Attention: The transformer computes relationships across all pairs of tokens (patches) in parallel, generating an attention map that highlights the regions most relevant to the task.

- Parallel Processing: Unlike CNNs, which build up context gradually by sliding filters across an image, ViTs relate every patch to every other patch within a single layer, capturing both global and local patterns.
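The steps above can be sketched in a few lines of plain Python. This is a deliberately stripped-down illustration under my own assumptions: a tiny 4×4 image, 2×2 patches, a single attention head, and identity projections (queries, keys, and values are the raw patch vectors). A real ViT adds learned linear projections, positional embeddings, a class token, and multiple heads. All function names are hypothetical.

```python
import math


def split_into_patches(image, patch_size):
    """Cut a 2D image (list of rows) into flattened, non-overlapping patches.

    Assumes both image dimensions are divisible by patch_size.
    """
    patches = []
    for top in range(0, len(image), patch_size):
        for left in range(0, len(image[0]), patch_size):
            patch = [image[top + r][left + c]
                     for r in range(patch_size) for c in range(patch_size)]
            patches.append(patch)
    return patches


def softmax(values):
    peak = max(values)  # subtract the max for numerical stability
    exps = [math.exp(v - peak) for v in values]
    total = sum(exps)
    return [e / total for e in exps]


def self_attention(tokens):
    """Single-head scaled dot-product attention with identity projections."""
    dim = len(tokens[0])
    weights = []
    for query in tokens:
        # Compare this token with every token, including itself.
        scores = [sum(q * k for q, k in zip(query, key)) / math.sqrt(dim)
                  for key in tokens]
        weights.append(softmax(scores))
    # Each output token is a weighted average of all tokens.
    outputs = [
        [sum(w * value[d] for w, value in zip(row, tokens)) for d in range(dim)]
        for row in weights
    ]
    return outputs, weights


image = [[(r * 4 + c) / 16 for c in range(4)] for r in range(4)]
tokens = split_into_patches(image, patch_size=2)   # 4 tokens of length 4
outputs, attention = self_attention(tokens)        # attention is a 4x4 matrix
```

Each row of `attention` sums to 1 and says how much one patch draws on every other patch, which is the all-pairs relationship described in the Self-Attention bullet.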

ViT Advantages in Medical Imaging

- Global Context: ViTs excel at modeling long-range dependencies, crucial for tasks like identifying a tumor’s boundaries across an entire scan.

- Explainability via Attention Maps: Self-attention often yields easily visualizable attention maps that show which parts of the image the model weights most heavily.

- Rapid Progress with Foundation Models: Large-scale, pre-trained ViTs (e.g., those trained on ImageNet or medical-focused datasets) can be fine-tuned for various medical tasks.
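As a rough sketch of how an attention map is read, the snippet below takes a flat list of per-patch attention weights, reshapes it into the patch grid, and maps the strongest patch back to pixel coordinates. The weights, the 3×3 grid, and the function names are made up for illustration. In a real model the weights would come from an attention layer, and a high weight shows where the model attends, not necessarily why it decided.

```python
def attention_to_grid(patch_weights, grid_width):
    """Reshape a flat list of per-patch weights into rows of the patch grid."""
    return [patch_weights[i:i + grid_width]
            for i in range(0, len(patch_weights), grid_width)]


def most_attended_region(patch_weights, grid_width, patch_size):
    """Return the pixel box (top, left, bottom, right) of the highest-weight patch."""
    index = max(range(len(patch_weights)), key=patch_weights.__getitem__)
    row, col = divmod(index, grid_width)
    top, left = row * patch_size, col * patch_size
    return top, left, top + patch_size, left + patch_size


# Made-up attention weights for a 3x3 grid of 16x16 patches (a 48x48 image).
weights = [0.05, 0.05, 0.10, 0.05, 0.40, 0.10, 0.05, 0.10, 0.10]

grid = attention_to_grid(weights, grid_width=3)
box = most_attended_region(weights, grid_width=3, patch_size=16)
print(box)  # (16, 16, 32, 32): the centre patch
```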

ViT Challenges

- Data-Hungry: Transformers generally require significantly more training data to avoid overfitting.

- Computational Cost: The attention mechanism, which compares every patch to every other patch, can be resource-intensive, especially for large images.

- Less Mature in Medical Imaging: CNNs have been around longer, meaning best practices for ViTs (e.g., dealing with smaller, specialized medical datasets) are still evolving.
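The computational cost point follows directly from the patch count: with N patches, attention compares N × N pairs. The sketch below tabulates this for a few image sizes, assuming square images and 16×16 patches. The sizes are my own examples, and the count ignores heads, layers, and any extra tokens.

```python
def attention_cost(image_size, patch_size=16):
    """Return (number of patch tokens, number of token pairs per attention map)."""
    tokens = (image_size // patch_size) ** 2
    return tokens, tokens * tokens


costs = {size: attention_cost(size) for size in (224, 512, 1024)}
for size, (tokens, pairs) in costs.items():
    print(f"{size}x{size}: {tokens} tokens, {pairs:,} pairs")
# 224x224: 196 tokens, 38,416 pairs
# 512x512: 1024 tokens, 1,048,576 pairs
# 1024x1024: 4096 tokens, 16,777,216 pairs
```

Doubling the image side quadruples the token count and multiplies the pair count by 16, which is why high-resolution medical images are hard for plain ViTs.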

CNNs vs ViTs in Medical Imaging

Classification

- CNNs: Often remain the go-to, especially if you have well-organized, moderately sized datasets. CNNs are also effective in tasks like pneumonia detection, fracture classification, or identifying cancerous lesions in histopathology images.

- ViTs: Perform extremely well if you can leverage transfer learning from large pre-trained models, or if you have a sufficiently large dataset to train from scratch.

Segmentation

- ViTs (particularly hybrid or pure transformer-based models) often shine here, thanks to their ability to incorporate global information. They excel at complex tasks like delineating brain tumors, segmenting organs, or finding microscopic structures in histopathology slides.

Robustness and Generalization

- CNNs: Tend to perform better when you have domain-specific knowledge (e.g., specialized CNN architectures for lung CT scans). They can still suffer from domain shift if the test data vary greatly from training data.

- ViTs: Show promise in generalizing to new, unseen data, but this can require substantial fine-tuning. Pre-training on a large dataset like ImageNet often improves a ViT’s robustness.

ViT or CNN: Cheatsheet

- Task Type

  - If you’re building a classification system with moderately sized data, CNNs are a strong, efficient choice.

  - If you need segmentation or tasks requiring a detailed global view (such as registering different scans of the same patient), ViTs or attention-augmented models often provide superior results.

- Dataset Size and Quality

  - Small Datasets: Start with a CNN or a ViT that’s pre-trained on a large benchmark (e.g., ImageNet). Transformers generally need more data to achieve top performance.

  - High-Resolution Images: ViTs can struggle if images are extremely large or if patching them leads to data loss. However, novel techniques (like hierarchical transformers) can mitigate these issues.

- Available Computational Resources

  - Limited Hardware? CNNs are more resource-friendly and typically train faster.

  - Ample GPU Power and RAM? ViTs’ attention mechanism can be parallelized, but be prepared for larger memory footprints.

- Explainability Needs

  - If clinical validation requires robust, transparent explanations, ViTs offer clearer attention maps.

  - If you only need a broad confidence level, CNNs might suffice, potentially combined with post-hoc interpretability methods.

- Future-Proofing

  - Transformers are rapidly becoming the standard in many fields, with “foundation models” (pre-trained on massive datasets) showing up in medical imaging (e.g., Meta’s Segment Anything Model and its medical adaptation, MedSAM).

  - Even if your immediate task is smaller, adopting a transformer-based approach could pay off long-term as the ecosystem around ViTs continues to grow.

Conclusion

CNNs revolutionized medical imaging by enabling computers to detect diseases with high accuracy and speed. However, vision transformers (ViTs) are proving to be a powerful contender, thanks to their global context modeling and more intuitive explainability.

If you’re working on a data-rich project with a heavy focus on transparency and complex segmentation, ViTs—especially with a solid pre-training strategy—are likely your best option. For smaller datasets or projects requiring faster, more resource-efficient solutions, CNNs remain a robust and proven choice.

References

- [1] Takahashi, S., Sakaguchi, Y., Kouno, N. et al. Comparison of Vision Transformers and Convolutional Neural Networks in Medical Image Analysis: A Systematic Review. J Med Syst 48, 84 (2024). https://doi.org/10.1007/s10916-024-02105-8